In previous entries we have described several implementations of C++ unordered associative containers

without and

with duplicate elements. Subsequent performance measurements have taught us how important algorithm locality is in the presence of CPU memory caches, which favor data structures with as few node indirections as possible. This suggests an improvement over the implementation introduced by Boost.MultiIndex hashed indices for Boost 1.56. In the case of unique elements, the data structure looked like this:

Let us now twist this structure a bit:

This new scheme results from the old one by merely swapping forward and back pointers (bucket nodes are equipped then with back pointers), which at first blush seems to gain us nothing in terms of efficiency. But there is a key difference: going from a bucket node to the first bucket element is now done directly, rather than with an indirection through the preceding node. Previously, traversing a bucket of n elements required visiting 1 bucket node and n + 2 element nodes; with the new implementation, 2 bucket nodes and n element nodes, one less node in total (and visiting bucket nodes is expected to be cheaper, as they lie contiguously in an array and are thus better cached). Similarly, insertion of an element at the beginning of a nonempty bucket involves visiting 2 nodes while formerly it involved 3, and if the bucket is empty (this is harder to see) it is 4 vs. 5 nodes.

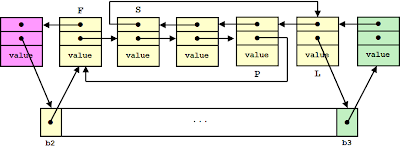

The same change can be easily applied to the case of duplicate elements, from:

to

This seemingly small modification brings about substantial improvements in performance. We have repeated the measurements done in the series of articles

under the same conditions as described there. For the reader's reference, we provide snaphots to

- Boost.MultiIndex for Boost 1.56, prerelease 1 (the one used for the first set of measurements),

- Boost.MultiIndex for Boost 1.56, prerelease 2, (with the changes proposed in this entry).

Besides the change in data structure, prerelease 2 of Boost.MultiIndex also includes some improvements in its rehashing procedure. The new results are depicted below.

|

| Insertion without rehashing, unique elements. |

|

| Insertion with rehashing, unique elements. |

|

| Insertion without rehashing, duplicate elements, Fmax = 1, G = 5. |

|

| Insertion without rehashing, duplicate elements, Fmax = 5, G = 5. |

|

| Insertion with rehashing, duplicate elements, Fmax = 1, G = 5. |

|

| Insertion with rehashing, duplicate elements, Fmax = 5, G = 5. |

|

| Erasure, unique elements. |

|

| Erasure, duplicate elements, Fmax = 1, G = 5. |

|

| Erasure, duplicate elements, Fmax = 5, G = 5. |

|

| Successful lookup, unique elements. |

|

| Unsuccessful lookup, unique elements. |

|

| Successful lookup, duplicate elements, Fmax = 1, G = 5. |

|

| Unsuccessful lookup, duplicate elements, Fmax = 1, G = 5. |

|

| Successful lookup, duplicate elements, Fmax = 5, G = 5. |

|

| Unsuccessful lookup, duplicate elements, Fmax = 5, G = 5. |

Note that implementations of C++ unordered associative containers based on

single linking cannot apply this improvement, that is, they cannot make bucket nodes point directly to the first element in the bucket: if they did so, the node preceding the first in a bucket would be unreachable in constant time, making it impossible to efficiently implement erasure.