- Goals of standardization

- Standardizing programming languages

- WG21

- An assessment model for library standardization

- Boost, the standard and beyond

- Conclusions

Goals of standardization

Standardization, in a form resembling our contemporary practices, began in the Industrial Revolution as a means to harmonize incipient mass production and their associated supply chains through the concept of interchangeability of parts. Some early technical standards are the Gribeauval system (1765, artillery pieces) and the British Standard Whitworth (1841, screw threads). Taylorism expanded standardization efforts from machinery to assembly processes themselves with the goal of increasing productivity (and, it could be said, achieving interchangeability of workers). Standards for metric systems, such as that of Revolutionary France (1791) were deemed "scientific" (as befitted the enlightenment spirit of the era) in that they were defined by exact, reproducible methods, but their main motivation was to facilitate local and international trade rather than support the advancement of science. We see a common theme here: standardization normalizes or leverages technology to favor industry and trade, that is, technology precedes standards.

This approach is embraced by 20th century standards organizations (DIN 1917, ANSI 1918, ISO 1947) through the advent of electronics, telecommunications and IT, and up to our days. Technological advancement, or, more generally, innovation (a concept coined around 1940 and ubiquitous today) is not seen as the focus of standardization, even though standards can promote innovation by consolidating advancements and best practices upon which further cycles of innovation can be built —and potentially be standardized in their turn. This interplay between standardization and innovation has been discussed extensively within standards organizations and outside. The old term "interchangeability of parts" has been replaced today by the more abstract concepts of compatibility, interoperability and (within the realm of IT) portability.

Standardizing programming languages

Most programming languages are not officially standardized, but some are. As of today, these are the ISO-standardized languages actively maintained by dedicated working groups within the ISO/IEC JTC1/SC22 subcommittee for programming languages:

- COBOL (WG4)

- Fortran (WG5)

- Ada (WG9)

- C (WG14)

- Prolog (WG17)

- C++ (WG21)

What's the purpose of standardizing a programming language? JC22 has a sort of foundational paper which centers on the benefits of portability, understood as both portability across systems/environments and portability of people (a rather blunt allusion to old-school Taylorism). The paper does not mention the subject of implementation certification, which can play a significant role for languages such as Ada that are used in heavily regulated sectors. More importantly to our discussion, it does not either mention what position SC22 holds with respect to innovation: regardless, we will see that innovation does indeed happen within SC22 workgroups, in what represents a radical departure from classical standardization practices.

WG21

C++ was mostly a one man's effort since its inception in the early 80s until the publication of The Annotated C++ Reference Manual (ARM, 1990), which served as the basis for the creation of an ANSI/ISO standardization committee that would eventually release its first C++ standard in 1998. Bjarne Stroustrup cited avoidance of compiler vendor lock-in (a variant of portability) as a major reason for having the language standardized —a concern that made much sense in a scene then dominated by company-owned languages such as Java.

Innovation was seen as WG21's business from its very beginning: some features of the core language, such as templates and exceptions, were labeled as experimental in the ARM, and the first version of the standard library, notably including Alexander Stepanov's STL, was introduced by the committee in the 1990-1998 period with little or no field experience. After a minor update to C++98 in 2003, the innovation pace picked up again in subsequent revisions of the standard (2011, 2014, 2017, 2020, 2023), and the current innovation backlog does not seem to falter; if anything, we could say that the main blocker for innovation within the standard is lack of human resources in WG21 rather than lack of proposals.

Innovation vs. adoption

Not all new features in the C++ standard have originated within WG21. We must distinguish here between the core language and the standard library:

- External innovation in the core language is generally hard as it requires writing or modifying a C++ compiler, a task outside the capabilities of many even though this has been made much more accessible with the emergence of open-source, extensible compiler frameworks such as LLVM. As a result, most innovation activity here happens within WG21, with some notable exceptions like Circle and Cpp2. Others have chosen to depart from the C++ language completely (Carbon, Hylo), so their potential impact on C++ standardization is remote at best.

- As for the standard library, the situation is more varied. These are some examples:

- Straight into the standard: <locale>.

- Straight into the standard, some prior art: IOStreams, STL, unordered associative containers.

- Straight into the standard, extensive prior art: std::string_view.

- Explicitly written for standardization, one reference implementation: mdspan.

- Explicitly written for standardization, various reference implementations: ranges, std::expected.

- Moderate to high level of prior field experience: Boost.Filesystem, Boost.Regex, Boost.SmartPtr, Boost.Thread, Boost.Tuple.

- Not initially intended for standardization, high level of prior field experience: {fmt}.

Pros and cons of standardization

The history of C++ standardization has met with some resounding successes (STL, templates, concurrency, most vocabulary types) as well as failures (exported templates, GC support, exception specifications, std::auto_ptr) and in-between scenarios (std::regex, ranges).

Focusing on the standard library, we can identify benefits of standardization vs. having a separate, non-standard component:

- The level of exposure to C++ users increases dramatically. Some companies have bans on the usage of external libraries, and even if no bans are in place, consuming the standard library is much more convenient than having to manage external dependencies —though this is changing.

- Standardization ensures a high level of (system) portability, potentially beyond the reach of external library authors without access to exotic environments.

- For components with high interoperability potential (think vocabulary types), having them in the standard library guarantees that they become the tool of choice for API-level module integration.

But there are drawbacks as well that must be taken into consideration:

- The evolution of a library halts or reduces significantly once it is standardized. One major factor for this is WG21's self-imposed restriction to preserve backwards compatibility, and in particular ABI compatibility. For example:

- Defects on the API of std::function had to be fixed by adding a new std::copyable_function component.

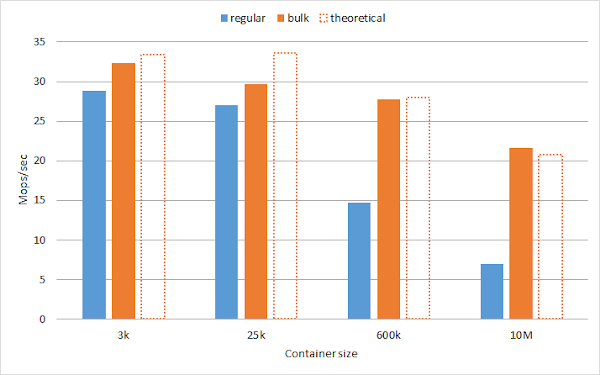

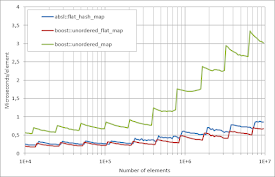

- Unordered associative containers were specified with the explicit assumption that they should be based on a technique known as separate chaining/closed addressing. The state of the art in this area has evolved spectacularly since 2003, and modern hash table implementations mostly use open addressing, which is de facto forbidden by the standard API, and outperform std::unordered_(map|set) by a large factor.

- Some libraries cover specialized domains that standard library implementors cannot be expected to master. Some cases in point:

- Current implementations of std::regex are notoriously slower than Boost.Regex, a situation aggravated by the need to keep ABI compatibility.

- Correct and efficient implementations of mathematical special functions require ample expertise in the area of numerical computation. As a result, Microsoft standard library implements these as mere wrappers over Boost.Math, and libc++ seems to be following suit. This is technically valid, but begs the question of what the standardization of these functions was useful for to begin with.

- Additions to the upcoming standard (as of this writing, C++26) don't benefit users immediately because the community typically lags behind by two or three revisions of the language.

So, standardizing a library component is not always the best course of action for the benefit of current and future users of that component. Back in 2001, Stroustrup remarked that "[p]eople sometime forget that a library doesn't have to be part of the standard to be useful", but, to this day, WG21 does not seem to have formal guidelines as to what constitutes a worthy addition to the standard, or how to engage with the community in a world of ever-expanding and more accessible external libraries. We would like to contribute some modest ideas in that direction.

An assessment model for library standardization

Going back to the basic principles of standards, the main benefits to be derived from standardizing a technology (in our case, a C++ library) are connected to higher compatibility and interoperability as a means to increase overall productivity (assumedly correlated to the level of usage of the library within the community). Leaving aside for the moment the size of the potential target audience, we identify two characteristics of a given library that make it suitable for standardization:

- Its portability requirements, defined as the level of coupling that an optimal implementation has with the underlying OS, CPU architecture, etc. The higher these requirements the most sense it makes to include the library as a mandatory part of the standard.

- Its interoperability potential, that is, how much the library is expected to be used as part of public APIs interconnecting different program modules vs. as a private implementation detail. A library with high interoperability potential is maximally useful when included in the common software "stack" shared by the community.

So, the baseline standardization value of a library, denoted V0, can be modeled as:

V0 = aP + bI,

where P denotes the library's portability requirements and I its interoperability potential. The figure shows the baseline standardization value of some library domains within the P-I plane. The color red indicates that this value is low, green that it is high.

A low baseline standardization value for a library does not mean that the library is not useful, but rather that there is little gain to be obtained from standardizing it as opposed to making it available externally. The locations of the exemplified domains in the P-I plane reflect the author's estimation and may differ from that of the reader.

Now, we have seen that the adoption of a library requires some prior field experience, defined as

E = T·U,

where T is the age of the library and U is average number of users.

- When E is very low, the library is not mature enough and standardizing it can result in a defective design that will be much harder to fix within the standard going forward; this effectively decreases the net value of standardization.

- On the contrary, if E is very high, which is correlated to the library having already reached its maximum target audience, the benefits of standardization are vanishingly small: most people are already using the library and including it into the official standard has little value added —the library has become a de facto standard.

So, we may expect to attain an optimum standardization opportunity S between the extremes E = 0 and Emax.

Finally, the net standardization value of a library is defined as

V = V0·S·Umax,

where Umax is the library's maximum target audience. Being a conceptual model, the purpose of this framework is not so much to establish a precise evaluation formula as to help stakeholders raise the right questions when considering a library for standardization:

- How high are the library's portability requirements?

- How high its interoperability potential?

- Is it too immature yet? Does it have actual field experience?

- Or, on the contrary, has it already reached its maximum target audience?

- How big is this audience?

Boost, the standard and beyond

Boost was launched in 1998 upon the idea that "[a] world-wide web site containing a repository of free C++ class libraries would be of great benefit to the C++ community". Serving as a venue for future standardization was mentioned only as a secondary goal, yet very soon many saw the project as a launching pad towards the standard library, a perception that has changed since. We analyze the different stages of this 25+-year-old project in connection with its contributions to the standard and to the community.

Golden era: 1998-2011

In its first 14 years of existence, the project grew from 0 to 113 libraries, for a total uncompressed size of 324 MB. Out of these 113 libraries, 12 would later be included in C++11, typically with modifications (Array, Bind, Chrono, EnableIf, Function, Random, Ref, Regex, SmartPtr, Thread, Tuple, TypeTraits); it may be noted that, even at this initial stage, most Boost libraries were not standardized or meant for standardization. From the point of view of the C++ standard library, however, Boost was the first contributor by far. We may venture some reasons for this success:

- There was much low-hanging fruit in the form of small vocabulary types and obvious utilities.

- Maybe due to a combination of scarce competition and sheer luck, Boost positioned itself very quickly as the go-to place for contributing and consuming high-quality C++ libraries. This ensured a great deal of field experience with the project.

- Many of the authors of the most relevant libraries were also prominent figures within the C++ community and WG21.

Middle-age issues: 2012-2020

By 2020, Boost had reached 164 libraries totaling 717 MB in uncompressed size (so, the size of the average library, including source, tests and documentation, grew by 1.5 with respect to 2011). Five Boost libraries were standardized between C++14 and C++20 (Any, Filesystem, Math/Special Functions, Optional, Variant): all of these, however, were already in existence before 2012, so the rate of successful new contributions from Boost to the standard decreased effectively to zero in this period. There were some additional unsuccessful proposals (Mp11).

The transition of Boost from the initial ramp-up to a more mature stage met with several scale problems that impacted negatively the public perception of the project (and, to some extent that we haven't able to determine, its level of usage). Of particular interest is a public discussion that took place in 2022 on Reddit and touched on several issues more or less recognized within the community of Boost authors:

- The default/advertised way to consume Boost as a monolithic download introduces a bulky, hard to manage dependency on projects.

- B2, Boost's native build technology, is unfamiliar to users more accustomed to widespread tools such as CMake.

- Individual Boost libraries are perceived as bloated in terms of size, internal dependences and compile times. Alternative competing libraries are self-contained, easier to install and smaller as they rely on newer versions of the C++ standard.

- Many useful components are already provided by the standard library.

- There are great differences between libraries in terms of their quality; some libraries are all but abandoned.

- Documentation is not good enough, in particular if compared to cppreference.com, which is regarded as the golden standard in this area.

A deeper analysis reveals some root causes for this state of affairs:

- Overall, the Boost project is very conservative and strives not to break users' code on each version upgrade (even though, unlike the standard, backwards API/ABI compatibility is not guaranteed). In particular, many Boost authors are reluctant to increase the minimum C++ standard version required for their libraries. Also, there is no mechanism in place to retire libraries from the project.

- Supporting older versions of the C++ standard locks in some libraries with suboptimal internal dependencies, the most infamous being Boost.MPL, which many identify (with or without reason) as responsible for long compile times and cryptic error messages.

- Boost's distribution and build mechanisms were invented in an era where package managers and build systems were not prevalent. This works well for smaller footprints but presents scaling problems that were not foreseen at the beginning of the project.

- Ultimately, Boost is a federation of libraries with different authors and sensibilities. This fact accounts for the various levels of documentation quality, user support, maintenance, etc.

Some of these characteristics are not negative per se, and have in fact resulted in an extremely durable and available service to the C++ community that some may mistakenly take for granted. Supporting "legacy C++" users is, by definition, neglected by WG21, and maintaining libraries that were already standardized is of great value to those who don't live on the edge (and, in the case of the std::regex fiasco, those who do). Confronted with the choice of serving the community today vs. tomorrow (via standardization proposals), the Boost project took, perhaps unplannedly, the first option. This is not to say that all is good with the Boost project, as many of the problems found in 2012-2020 are strictly operational.

Evolution: 2021-2024 and the future

Boost 1.85 (April 2024) contains 176 libraries (7% increase with respect to 2020) and has a size of 731 MB (2% increase). Only one Boost component has partially contributed to the C++23 standard library (boost::container::flat_map), though there has been some unsuccessful proposals (the most notable being Boost.Asio).

In response to the operational problems we have described before, some authors have embarked on a number of improvement and modernization tasks:

- Beginning in Boost 1.82 (Apr 2023), some core libraries announced the upcoming abandonment of C++03 support as part of a plan to reduce code base sizes, maintenance costs, and internal dependencies on "polyfill" components. This initiative has a cascading effect on dependent libraries that is still ongoing.

- Alongside C++03 support drop, many libraries have been updated to reduce the number of internal dependencies (that even were, in some cases, cyclic). The figure shows the cumulative histograms of the number of dependencies for Boost libraries in versions 1.66 (2017), 1.75 (2020) and 1.85 (2024):

- Official CMake support for the entire Boost project was announced in Oct 2023. This support also allows for downloading and building of individual libraries (and their dependencies).

- On the same front of modular consumption, there is work in progress to modularize B2-based library builds, which will enable package managers such as Conan to offer Boost libraries individually. vcpkg already offers this option.

- Starting in July 2023, boost.org includes a search widget indexing the documentation of all libraries. The ongoing MrDocs project seeks to provide a Doxygen-like tool for automatic C++ documentation generation that could eventually support Boost authors —library docs are currently written more or less manually in a plethora of languages such as raw HTML, Quickbook, Asciidoc, etc. There is a new Boost website in the works scheduled for launch during mid-2024.

Where is Boost headed? It must be stressed again that the project is a federation of authors without a central governing authority in strategic matters, so the following should be taken as an interpretation of detected current trends:

- Most of the recently added libraries cover relatively specific application-level domains (networking/database protocols, parsing) or else provide utilities likely to be superseded by future C++ standards, as is the case with reflection (Describe, PFR). One library is a direct backport of a C++17 standard library component (Charconv). Boost.JSON provides yet another solution in an area already rich with alternatives external to the standard library. Boost.LEAF proposes an approach to error handling radically different to that of the latest standard (std::expected). Boost.Scope implements and augment a WG21 proposal currently on hold (<experimental/scope>).

- In some cases, standard compatibility has been abandoned to provide faster performance or richer functionality (Container, Unordered, Variant2).

- No new library supports C++03, which reduces drastically their number of internal dependencies (except in the case of networking libraries depending on Boost.Asio).

- On the other hand, most new libraries are still conservative in that they only require C++11/14, with some exceptions (Parser and Redis require C++17, Cobalt requires C++20).

- There are some experimental initiatives like the proposal to serve Boost libraries as C++ modules, which has been met with much interest and support from the Visual Studio team. An important appeal of this idea is that it will allow compiler vendors and the committee to obtain field experience from a large, non-trivial codebase.

The current focus of Boost seems then to have shifted from standards-bound innovation to higher-level and domain-specific libraries directly available to users of C++11/14 and later. More stress is increasingly being put on maintenance, reduced internal dependencies and modular availability, which further cements the thesis that Boost authors are more concerned about serving the C++ community from Boost itself than eventually migrating to the standard. There is still a flow of ideas from Boost to WG21, but they do not represent the bulk of the project activity.

Conclusions

Traditionally, the role of standardization has been to consolidate previous innovations that have reached maturity so as to maximize their potential for industry vendors and users. In the very specific case of programming languages, and WG21/LEWG in particular, the standards committee has taken on the role of innovator and is pushing the industry rather than adopting external advancements or coexisting with them. This presents some problems related to lack of field experience, limitations to internal evolution imposed by backwards compatibility and an associated workload that may exceed the capacity of the committee. Thanks to open developer platforms (GitHub, GitLab), widespread build systems (CMake) and package managers (Conan, vcpkg), the world of C++ libraries is richer and more available than ever. WG21 could reconsider its role as part of an ecosystem that thrives outside and alongside its own activity. We have proposed a conceptual evaluation model for standardization of C++ libraries that may help in the conversations around these issues. Boost has shifted its focus from being a primary venue for standardization to serving the C++ community (including users of previous versions of the language) through increasingly modular, high level and domain-specific libraries. Hopefully, the availability and reach of the Boost project will help gain much needed field experience that could eventually lead to further collaborations with and contributions to WG21 in a non-preordained way.