After having studied addition, subtraction and multiplication of C preprocessor bignums, let us now turn to division. To gain some insight into the mechanics of the division algorithm we reproduce a division layout the way we were taught to do at school:

20553

8879265 |432

239

2392

2326

1665

369

To divide 8879265 by 432, we begin by isolating the first 3-digit group of the dividend, 887, and calculate how many times the divisor 432 makes it into it; we have that 887 = 432·2 + 23, so 2 is the first digit of our result, and we write down the remainder 23. Next, we append the next digit of the dividend, 9 , to the remainder and proceed iteratively:

- 887 = 432·2 + 23,

- 239 = 432·0 + 239,

- 2392 = 432·5 + 232,

- 2326 = 432·5 + 166,

- 1665 = 432·3 + 369

Let us assume we have a macro BN_QDIGIT_REMAINDER(bn1,bn2) that returns a sequence (q)(r), where q is the greatest digit between 0 and 9 such that bn2·q ≤ bn1 and r is the bignum representing the value bn1 − bn2·q. We can then implement the division of bn1 = (bn11)···(b1n) by bn2 (bn21)···(b2m)by folding over reverse((bn1m)···(b1n)) with the following recursive state definition:

statei = (leadi,bn2,resi);

state0 = ((bn11)···(b1m-1), bn2,empty sequence),

leadi+1 = first(BN_QDIGIT_REMAINDER(leadi,bn2)),

resi+1 = append(resi, second(BN_QDIGIT_REMAINDER(leadi,bn2))),

where the functions first and second simply return the first and second component of a sequence of size 2. This is the complete Boost.Preprocessor-based algorithm:

#define BN_DIV(bn1,bn2) \

BOOST_PP_IF( \

BN_LESS(bn1,bn2), \

BN_DIV_CASE1, \

BN_DIV_CASE2)(bn1,bn2)

#define BN_DIV_CASE1(bn1,bn2) (0)

#define BN_DIV_CASE2(bn1,bn2) \

BN_DIV_POSTPROCESS( \

BOOST_PP_SEQ_TAIL(BN_DIV_STATE_RESULT(\

BOOST_PP_SEQ_FOLD_LEFT( \

BN_DIV_OP, \

( \

(0) \

BOOST_PP_SEQ_FIRST_N( \

BOOST_PP_DEC(BOOST_PP_SEQ_SIZE(bn2)),bn1)) \

(bn2) \

((~)), \

BOOST_PP_SEQ_REST_N( \

BOOST_PP_DEC(BOOST_PP_SEQ_SIZE(bn2)),bn1)))))

#define BN_DIV_POSTPROCESS(result) \

BOOST_PP_IF( \

BOOST_PP_EQUAL(0,BOOST_PP_SEQ_ELEM(0,result)), \

BOOST_PP_SEQ_TAIL, \

BN_DIV_POSTPROCESS_CASE2)(result)

#define BN_DIV_POSTPROCESS_CASE2(result) result

#define BN_DIV_OP(s,state,x) \

BN_DIV_OP_IMPL( \

BN_QDIGIT_REMAINDER( \

BN_DIV_STATE_LEAD(state)(x), \

BN_DIV_STATE_BN2(state)), \

BN_DIV_STATE_BN2(state), \

BN_DIV_STATE_RESULT(state))

#define BN_DIV_OP_IMPL(qr,bn2,result) \

(BOOST_PP_SEQ_ELEM(1,qr)) \

(bn2) \

(result(BOOST_PP_SEQ_ELEM(0,qr)))

#define BN_DIV_STATE_LEAD(state) BOOST_PP_SEQ_ELEM(0,state)

#define BN_DIV_STATE_BN2(state) BOOST_PP_SEQ_ELEM(1,state)

#define BN_DIV_STATE_RESULT(state) BOOST_PP_SEQ_ELEM(2,state)

We are then left with the task of implementing the macro BN_QDIGIT_REMAINDER(bn1,bn2). A brute-force approach is to try the digits 9,8,...,0 until we met the first one satisfying bn2·q ≤ bn1, but this is unnecesarily wasteful: we find in Brinch Hansen's paper "Multiple-length division revisited" the following nice theorem: if bn1 and bn2 are represented in such a way that length(bn1) = length(bn2) +1 (even if that means bn1 has to be padded with some some leading zeros) and we define

qe =min(9, floor((bn11·100 + bn12·10 + bn13)/(bn21·10 + bn22))),

then

q ≤ qe ≤ q + 1,

that is, we can use the first three digits of bn1 and the first two digits of bn2 to estimate q and will be off at most by one:

#define BN_QDIGIT_REMAINDER(bn1,bn2) \

BN_QDIGIT_REMAINDER_IMPL( \

BN_ZERO_SEQ( \

BOOST_PP_IF( \

BOOST_PP_LESS_EQUAL( \

BOOST_PP_SEQ_SIZE(bn1), \

BOOST_PP_SEQ_SIZE(bn2)), \

BOOST_PP_INC( \

BOOST_PP_SUB( \

BOOST_PP_SEQ_SIZE(bn2), \

BOOST_PP_SEQ_SIZE(bn1))), \

0))bn1, \

bn2)

#define BN_ZERO_SEQ(n) \

BOOST_PP_REPEAT(n,BN_ZERO_SEQ_OP,~)

#define BN_ZERO_SEQ_OP(z,n,data) (0)

#define BN_QDIGIT_REMAINDER_IMPL(bn1,bn2) \

BOOST_PP_IF( \

BOOST_PP_LESS(BOOST_PP_SEQ_SIZE(bn2),2), \

BN_QDIGIT_REMAINDER_CASE1, \

BN_QDIGIT_REMAINDER_CASE2)(bn1,bn2)

#define BN_QDIGIT_REMAINDER_CASE1(bn1,bn2) \

BN_QDIGIT_REMAINDER_CASE1_RETURN( \

BOOST_PP_ADD( \

BOOST_PP_MUL(BOOST_PP_SEQ_ELEM(0,bn1),10), \

BOOST_PP_SEQ_ELEM(1,bn1)), \

BOOST_PP_SEQ_ELEM(0,bn2))

#define BN_QDIGIT_REMAINDER_CASE1_RETURN(n1,n2) \

(BOOST_PP_DIV(n1,n2))((BOOST_PP_MOD(n1,n2)))

#define BN_QDIGIT_REMAINDER_CASE2(bn1,bn2) \

BN_QDIGIT_REMAINDER_CASE2_TEST( \

bn1,bn2, \

BN_DIV_RRR_DD( \

BOOST_PP_ADD( \

BOOST_PP_MUL(BOOST_PP_SEQ_ELEM(0,bn1),10), \

BOOST_PP_SEQ_ELEM(1,bn1)), \

BOOST_PP_SEQ_ELEM(2,bn1), \

BOOST_PP_SEQ_ELEM(0,bn2), \

BOOST_PP_SEQ_ELEM(1,bn2)))

#define BN_QDIGIT_REMAINDER_CASE2_TEST(bn1,bn2,q) \

BN_QDIGIT_REMAINDER_CASE2_TEST_IMPL(bn1,bn2,q,BN_DIGIT_MUL(bn2,q))

#define BN_QDIGIT_REMAINDER_CASE2_TEST_IMPL(bn1,bn2,q,bn2q) \

BOOST_PP_IF( \

BN_LESS_WITH_ZERO(bn1,bn2q), \

BN_QDIGIT_REMAINDER_CASE2_TEST_IMPL_CASE1, \

BN_QDIGIT_REMAINDER_CASE2_TEST_IMPL_CASE2)(bn1,bn2,q,bn2q)

#define BN_QDIGIT_REMAINDER_CASE2_TEST_IMPL_CASE1(bn1,bn2,q,bn2q) \

(BOOST_PP_DEC(q))(BN_SUB(bn1,BN_SUB(bn2q,bn2)))

#define BN_QDIGIT_REMAINDER_CASE2_TEST_IMPL_CASE2(bn1,bn2,q,bn2q) \

(q)(BN_SUB(bn1,bn2q))

#define BN_LESS_WITH_ZERO(bn1,bn2) \

BOOST_PP_IF( \

BOOST_PP_EQUAL(BOOST_PP_SEQ_ELEM(0,bn1),0), \

BN_LESS_WITH_ZERO_CASE1, \

BN_LESS)(bn1,bn2)

#define BN_LESS_WITH_ZERO_CASE1(bn1,bn2) \

BN_LESS(BOOST_PP_SEQ_TAIL(bn1),bn2)

The code above invokes the macro BN_DIV_RRR_DD(r1r2,r3,d1,d2) whose behavior is defined by:

BN_DIV_RRR_DD(r1r2,r3,d1,d2) = floor((r1r2·10 + r3)/(d1·10 + d2)).

The operation cannot be done directly because Boost.Preprocessor-based arithmetics are limited to numbers between 0 and BOOST_PP_LIMIT_MAG = 256. Instead, floor(r1r2/d1) is used as an initial upper-bound estimation which is then adjusted to the correct value using arithmetic operations that never exceed the BOOST_PP_LIMIT_MAG limit. The implementation of BN_DIV_RRR_DD is somewhat cumbersome but conceptually simple.

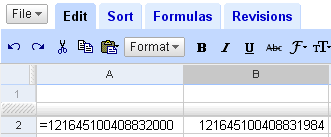

The complete implementation of all four arithmetic operations --addition, subtraction, multiplication and division-- is provided. Cpp bignum arithmetic is not very fast; for instance, given the following calculation of 30!:

BN_TO_LITERAL(

BN_MUL((3)(0),BN_MUL((2)(9),BN_MUL((2)(8),BN_MUL((2)(7),

BN_MUL((2)(6),BN_MUL((2)(5),BN_MUL((2)(4),BN_MUL((2)(3),

BN_MUL((2)(2),BN_MUL((2)(1),BN_MUL((2)(0),BN_MUL((1)(9),

BN_MUL((1)(8),BN_MUL((1)(7),BN_MUL((1)(6),BN_MUL((1)(5),

BN_MUL((1)(4),BN_MUL((1)(3),BN_MUL((1)(2),BN_MUL((1)(1),

BN_MUL((1)(0),BN_MUL((9) ,BN_MUL((8) ,BN_MUL((7),

BN_MUL((6) ,BN_MUL((5) ,BN_MUL((4) ,BN_MUL((3),

(2))))))))))))))))))))))))))))))

it takes around 20 seconds for this expression to expand to

265252859812191058636308480000000

using GCC 3.4.4 for Cygwin in a Windows box with a 1.66 GHz Intel Core 2 Duo and 1 GB RAM. Within the same environment, the long division

BN_TO_LITERAL(

BN_DIV(

(2)(6)(5)(2)(5)(2)(8)(5)(9)(8)(1)(2)(1)(9)(1)(0)(5)

(8)(6)(3)(6)(3)(0)(8)(4)(8)(0)(0)(0)(0)(0)(0)(0),

(1)(8)(3)(7)(0)(8)(0)(0)))

takes around 12 seconds. In a later entry, we will explore ways to improve the performance of bignum multiplication and division through memoization techniques.